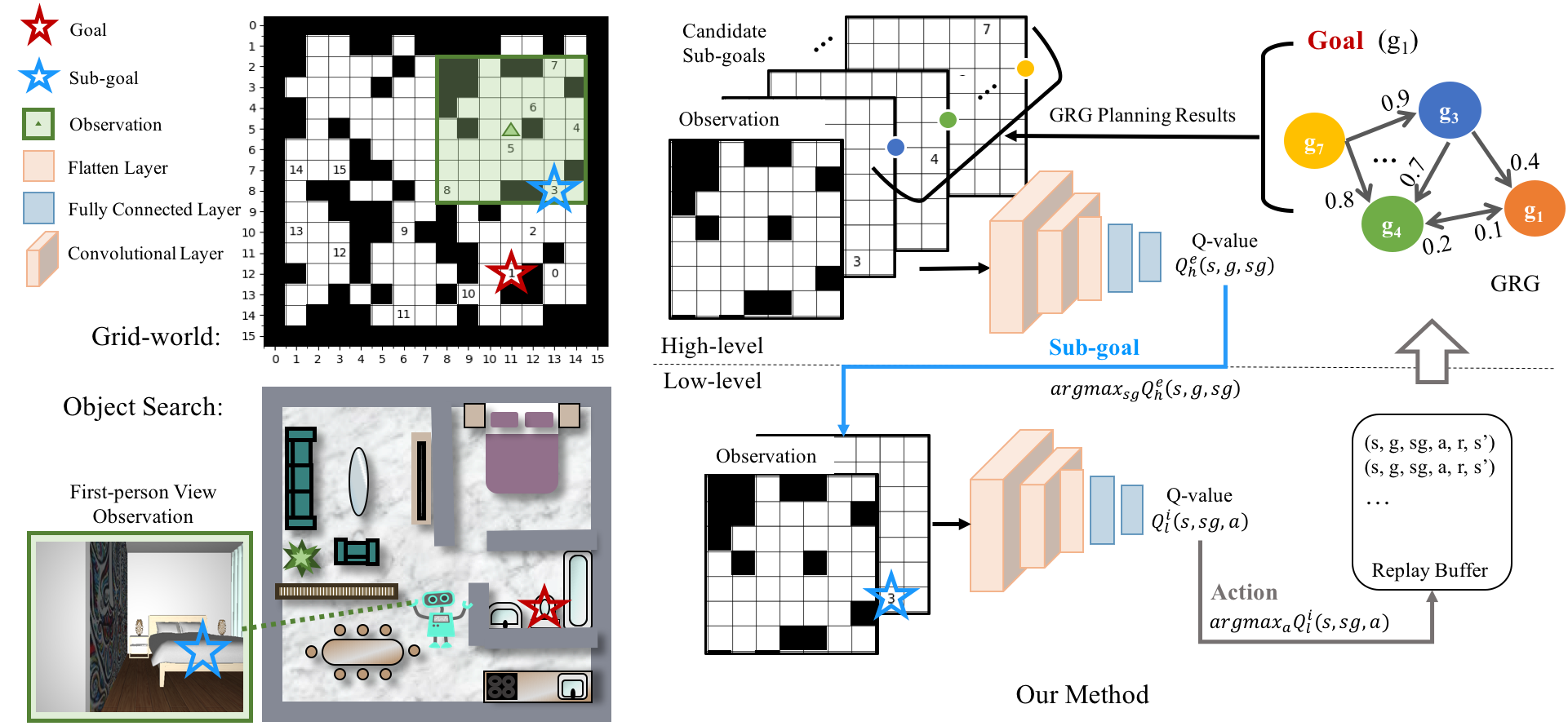

We present a novel two-layer hierarchical reinforcement learning approach equipped with a Goals Relational Graph (GRG) for tackling partially observable goal-driven tasks, such as goal-driven visual navigation. Our GRG captures the underlying relations of all goals in the goal space through a Dirichlet-categorical process that facilitates: 1) the high-level network in proposing a sub-goal towards achieving a designated final goal; 2) the low-level network in learning an optimal policy; and 3) the overall system in generalizing to unseen environments and goals. We evaluate our approach in two settings of partially observable goal-driven tasks: a grid-world domain and a robotic object search task. Our experimental results show that our approach exhibits superior generalization performance on both unseen environments and new goals.
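As a rough illustration of the Dirichlet-categorical idea mentioned above, the sketch below keeps a count of how often one goal is observed alongside another and turns those counts into a smoothed probability using a symmetric Dirichlet prior. This is not the authors' implementation: the class name `GoalRelationCounts`, the `prior` parameter, and the notion of an "observation" as a simple pair of goal indices are all assumptions made for the example.

```python
class GoalRelationCounts:
    """Hypothetical Dirichlet-categorical estimate of goal-to-goal relations.

    For each goal i, the relation to every goal j is modeled as a categorical
    distribution with a symmetric Dirichlet prior (an assumption for this sketch).
    """

    def __init__(self, num_goals, prior=1.0):
        self.num_goals = num_goals
        self.prior = prior  # pseudo-count added to every goal pair
        self.counts = [[0.0] * num_goals for _ in range(num_goals)]

    def observe(self, goal, related_goal):
        # Record one observation of `related_goal` in the context of `goal`.
        self.counts[goal][related_goal] += 1.0

    def relation_probs(self, goal):
        # Posterior mean of the categorical parameters:
        # (prior + count_ij) / sum_j (prior + count_ij)
        posterior = [c + self.prior for c in self.counts[goal]]
        total = sum(posterior)
        return [p / total for p in posterior]


# Toy usage with three goals, indexed 0..2.
graph = GoalRelationCounts(num_goals=3)
graph.observe(0, 1)
graph.observe(0, 1)
graph.observe(0, 2)
probs = graph.relation_probs(0)
```

With the prior acting as a pseudo-count, goal pairs that were never observed together still receive a small non-zero probability, which is one plausible reason such a model could help with goals not seen during training.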

[Figure panels: unseen environments, seen goals; unseen environments, unseen goals. Captions: kitchen (toaster), living room (painting), bedroom (mirror), bathroom (towel).]

[Figure panels: unseen environments, unseen goals.]

[Figure panels: seen environment, seen goal (television); seen environment, unseen goal (ottoman); unseen environment, seen goal (television); unseen environment, unseen goal (music).]

If you find this work helpful, please consider citing:

@InProceedings{ye2021hierarchical,
  author = {Ye, Xin and Yang, Yezhou},
  title = {Hierarchical and Partially Observable Goal-driven Policy Learning with Goals Relational Graph},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  month = {June},
  year = {2021}
}